AI Part Two: The Link-Andreessen Thought Experiment Debate

Since this is an imaginary debate about artificial intelligence (AI), the author of this Substack post, Al Link, will state the con-AI arguments and contrast them with the pro-AI arguments previously published by Marc Andreessen. All Andreessen quotes are from his 06 June 2023 Substack post, “Why AI Will Save The World.”

I acknowledge that artificial intelligence (AI) holds a promise of extraordinary benefits to humanity, some of which are already manifested, e.g., my Secret Cosmos graphic was generated with Microsoft’s Bing ChatGPT AI Image Creator. It is precisely that promise of extraordinary benefits that is the Trojan horse that has already seduced us into welcoming AI into our lives with open arms. In this post, I will not discuss in any detail the possible benefits of AI; many other authors have done a coherent job with that already.

The thesis of this Substack post is that AI poses a clear and present danger to human beings. There are at least three threats that AI poses to human beings: 1) The most immediate threat is that human beings will use AI to harm other human beings, for instance, the possibility of almost complete domination by a few over everyone else, or the already common use of AI-generated information, say images or voices that are fake but presented as real, to scam a target. Threats of human beings harming other human beings are serious threats, but they are not limited to being caused by AI, and are not nearly as serious as the other two threats. 2) The threat that AI could enslave the human population. That threat is not existential, but hardly less serious, e.g., imagine humans forced to be servant-slaves of machines, or humans in zoos. 3) The ultimate existential threat of extinction of human beings. To help you imagine these threats, if you have not already done so, watch the movies 2001: A Space Odyssey, The Terminator and The Matrix, and the Netflix series Altered Carbon.

I do not know of any authors who deny that humans using AI to harm other humans is a clear and present danger. Andreessen acknowledges this threat from AI.

Andreessen: I actually agree…AI will make it easier for bad people to do bad things…AI will make it easier for criminals, terrorists, and hostile governments to do bad things, no question.

On the other hand, there is serious disagreement about the other two threats. Many authors, e.g., Marc Andreessen, deny that AI poses any threat of enslavement or extinction. I will deconstruct most of Andreessen’s important premises about AI.

Andreessen: …the public conversation about AI is presently shot through with hysterical fear and paranoia…But a moral panic is by its very nature irrational – it takes what may be a legitimate concern and inflates it into a level of hysteria that ironically makes it harder to confront actually serious concerns.

Marc Andreessen unapologetically extols the virtues of AI. He believes AI is the best thing since sliced bread. Andreessen is one of the strongest, I would say, fanatical, cheerleaders for AI. Andreessen enthusiastically declares that AI is the salvation of humanity, offering solutions and benefits in virtually every human endeavor, including science, technology, education, economics, business, healthcare, agriculture, war-making, governance, etc.

Andreessen: …we should drive AI into our economy and society as fast and hard as we possibly can, in order to maximize its gains for economic productivity and human potential.

Andreessen makes a number of assumptions in his Substack post. First, he assumes that coding meta-rules into AI software (algorithmic rules for rules, such as “do no harm to humans”) should give us complete confidence that the machines will, in fact, do no harm to humans. A further assumption is that the virtually unlimited possible benefits of using AI outweigh any possible dangers. Yet another assumption is that any possible dangers from AI should be effectively neutralized by enforcing existing laws already in place. Therefore, Andreessen concludes, there is no need whatsoever for governments to regulate AI. He declares that government regulation of AI is unnecessary and unreasonable overreach, and the “inevitable” outcome will be loss of personal freedoms. Andreessen actually includes even the vilest hate speech in the protected category, under freedom of speech.

Andreessen: …the slippery slope is not a fallacy, it’s an inevitability. Once a framework for restricting even egregiously terrible content is in place – for example, for hate speech…a shockingly broad range of government agencies and activist pressure groups and nongovernmental entities will kick into gear and demand ever greater levels of censorship and suppression of whatever speech they view as threatening to society and/or their own personal preferences.

Andreessen seems quite ok with hate speech and intentional misrepresentation of the truth. Hate speech is already raging out of control with the popularity of social networks, and AI will likely push the quantity of hate speech toward infinity, at warp speed. YouTube just announced it will once again allow videos promoting the 2020 presidential election denial lie, after previously deleting tens of thousands of such videos. Why, you ask? Because it is profitable to do so. Twitter, under Musk’s misguidance, has also opened the doors to virtually any kind of hate and misinformation anyone cares to post, all in the name of free speech, but actually in the service of profit maximization, or simply because it aligns with his personal political agenda. However, it is obvious, is it not, that your freedom to say whatever you want certainly does not include screaming fire in a crowded concert auditorium.

Haidt: …artificial intelligence is close to enabling the limitless spread of highly believable disinformation…

Haidt: Social media’s empowerment of…domestic trolls, and foreign agents is creating a system that looks less like democracy and more like rule by the most aggressive.

“Why the Past 10 Years of American Life Have Been Uniquely Stupid” by Jonathan Haidt, The Atlantic, 11 April 2022

Andreessen is all-in for AI; he invites us to cuddle up with our AI machines. Consider these quotes from his 06 June 2023 Substack post:

· And this isn’t just about intelligence! …infinitely patient and sympathetic AI will make the world warmer and nicer.

· The AI tutor will…help… [children, brackets added] maximize their potential with the machine version of infinite love.

· …development and proliferation of AI…is a moral obligation that we have to ourselves, to our children, and to our future.

· What AI offers us is the opportunity to profoundly augment human intelligence to make all of these outcomes of intelligence – and many others, from the creation of new medicines to ways to solve climate change to technologies to reach the stars – much, much better from here.

· …what AI is: The application of mathematics and software code to teach computers how to understand, synthesize, and generate knowledge in ways similar to how people do it. AI is a computer program…

· A shorter description of what AI isn’t: Killer software and robots that will spring to life and decide to murder the human race or otherwise ruin everything, like you see in the movies.

AI is not alive, sentient or conscious.

AI does not satisfy my criteria of mind. I define mind to be an instance of immaterial ego consciousness docked to the physical body, brain and central nervous system of a live organism. Consciousness only docks to a living organism, never to a machine. Therefore, it is certain, AI is not conscious. AI is basically software that commands a machine.

AI is a physical construct = machine, e.g., sequences of 0s and 1s, in the physical form of bits or qubits, that encode algorithm rules or instructions (= programming for a physical computer); in other words, AI is software programming plus computer (or robot) machine; AI is not consciousness precisely because AI is not alive.

AI will follow any existing encoded programming instructions (= algorithms), but not even the creators of AI know how AI generates its outputs. In the jargon of the AI industry, AI is referred to as a black box. AI is already operating in ways that exceed the simple instructions or rules established by the programmers, which is possible because AI is already making logical inferences and is already capable of self-improvement, which means it is capable of changing its own algorithms.

Here are a few quotes from a 2023 article on AI in Quanta Magazine. Ornes:

· we don’t know how they work under the hood

· LLMs [large language models, brackets added] reach beyond the constraints of their training data

· LLMs suddenly attained new abilities…spontaneously…that had been completely absent before

· There is an obvious problem with asking these models to explain themselves: They are notorious liars

· We don’t know how to tell in which sort of application is the capability of harm going to arise.

“The Unpredictable Abilities Emerging From Large AI Models” by Stephen Ornes, Quanta Magazine, 16 March 2023

When I say AI is already capable of logical inference, I mean AI is already teaching itself, improving itself and reprogramming itself, which means it is using logical inference to make decisions about itself. For instance:

The Massachusetts Institute of Technology Computer Science & Artificial Intelligence Laboratory (MIT CSAIL) is a major player in AI development. They are at the forefront of programming AI to apply logical inference; in fact, they have already done it.

Gordon: Something called ‘textual entailment,’ a way to help these models understand a variety of language tasks, where if one sentence (the premise) is true, then the other sentence (the hypothesis) is likely to be true as well. For example, if the premise is, ‘all cats have tails’ then the hypothesis ‘a tabby cat has a tail’ would be entailed by the premise.

Gordon: …using a technique called ‘self-training,’ where the model uses its own predictions to teach itself, effectively learning without human supervision and additional annotated training data.

Brackets original, “MIT researchers make language models scalable self-learners” by Rachel Gordon, MIT CSAIL, 02 June 2023
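To make the “self-training” idea in the Gordon quote more concrete, here is a minimal Python sketch. It is not the MIT CSAIL method: it swaps in a toy one-dimensional nearest-centroid classifier, and the names `fit_centroids`, `predict`, `self_train` and the `min_margin` threshold are all hypothetical, chosen only for illustration. What it shows is the loop itself: the model labels unlabeled examples with its own predictions, keeps only the confident ones, and retrains on them without any new human annotation.

```python
from statistics import mean

def fit_centroids(points, labels):
    """Toy model (an assumption): one mean value per class."""
    classes = sorted(set(labels))
    return {c: mean(p for p, l in zip(points, labels) if l == c) for c in classes}

def predict(centroids, x):
    """Return (label, margin). Margin is how much closer x is to the best
    centroid than to the runner-up. Assumes at least two classes."""
    ranked = sorted(centroids, key=lambda c: abs(x - centroids[c]))
    best, runner_up = ranked[0], ranked[1]
    margin = abs(x - centroids[runner_up]) - abs(x - centroids[best])
    return best, margin

def self_train(labeled, unlabeled, min_margin=2.0, max_rounds=10):
    """Repeatedly pseudo-label confident unlabeled points and retrain."""
    points = [x for x, _ in labeled]
    labels = [y for _, y in labeled]
    pool = list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(points, labels)
        scored = [(x, predict(centroids, x)) for x in pool]
        confident = [(x, lab) for x, (lab, m) in scored if m >= min_margin]
        if not confident:
            break  # nothing the model is sure about: stop teaching itself
        for x, lab in confident:
            points.append(x)   # the model's own prediction becomes training data
            labels.append(lab)
            pool.remove(x)
    return fit_centroids(points, labels), pool

model, leftover = self_train([(1.0, "low"), (9.0, "high")], [2.0, 8.0, 5.2, 0.5])
print(model, leftover)
```

In this toy run the model starts from two human-labeled points, confidently absorbs 2.0, 8.0 and 0.5 on its own, and leaves the ambiguous value 5.2 unlabeled. The confidence threshold is the only guard against the model reinforcing its own mistakes, which is the design choice worth noticing.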

Clear and present danger 1: humans using AI to harm other humans.

AI poses the monumentally serious threat of misuse and abuse by some humans against other humans, and that danger is immediate, e.g., documented instances occurring right now, not lurking for some time in the distant future. There are documented incidents that reveal AI lying, or fabricating misinformation, e.g., false references for scientific research or legal case law research, if a user simply asks the AI to do so. There are instances of AI generating images, sounds and video recordings that are fake, but presented as real.

Singh: A person can no longer tell apart the fake celebrity faces generated by generative neural networks from the real ones…

“Software Ate The World, Now AI Is Eating Software” by Tarry Singh, Forbes, 29 August 2019

Siddiqui: …almost anyone can create convincing fake images and videos online.

“AI’s Rapid Growth Threatens to Flood 2024 Campaigns With Fake Videos” by Sabrina Siddiqui, Wall Street Journal, 05 June 2023

AARP: Artificial intelligence has opened a new door for scammers, making it easy to replicate almost anyone’s voice from a brief audio sample. That has made frauds such as the grandparent scam—built around a fake phone call supposedly from a grandchild—frighteningly effective…All crooks need is a short sample of a person’s voice, which can often be found on social media posts…they run it through sophisticated but readily available (and cheap) software, to create a digital duplicate, which they can program to say whatever words they want to use…The next round of robot calls will be made from scripts created from AI chatbots converted to a person’s voice…

Brackets original, AARP Bulletin, May 2023, p. 26.

Haidt: American democracy is now operating outside the bounds of sustainability. If we do not make major changes soon, then our institutions, our political system, and our society may collapse during the next major war, pandemic, financial meltdown, or constitutional crisis.

“Why the Past 10 Years of American Life Have Been Uniquely Stupid” by Jonathan Haidt, The Atlantic, 11 April 2022

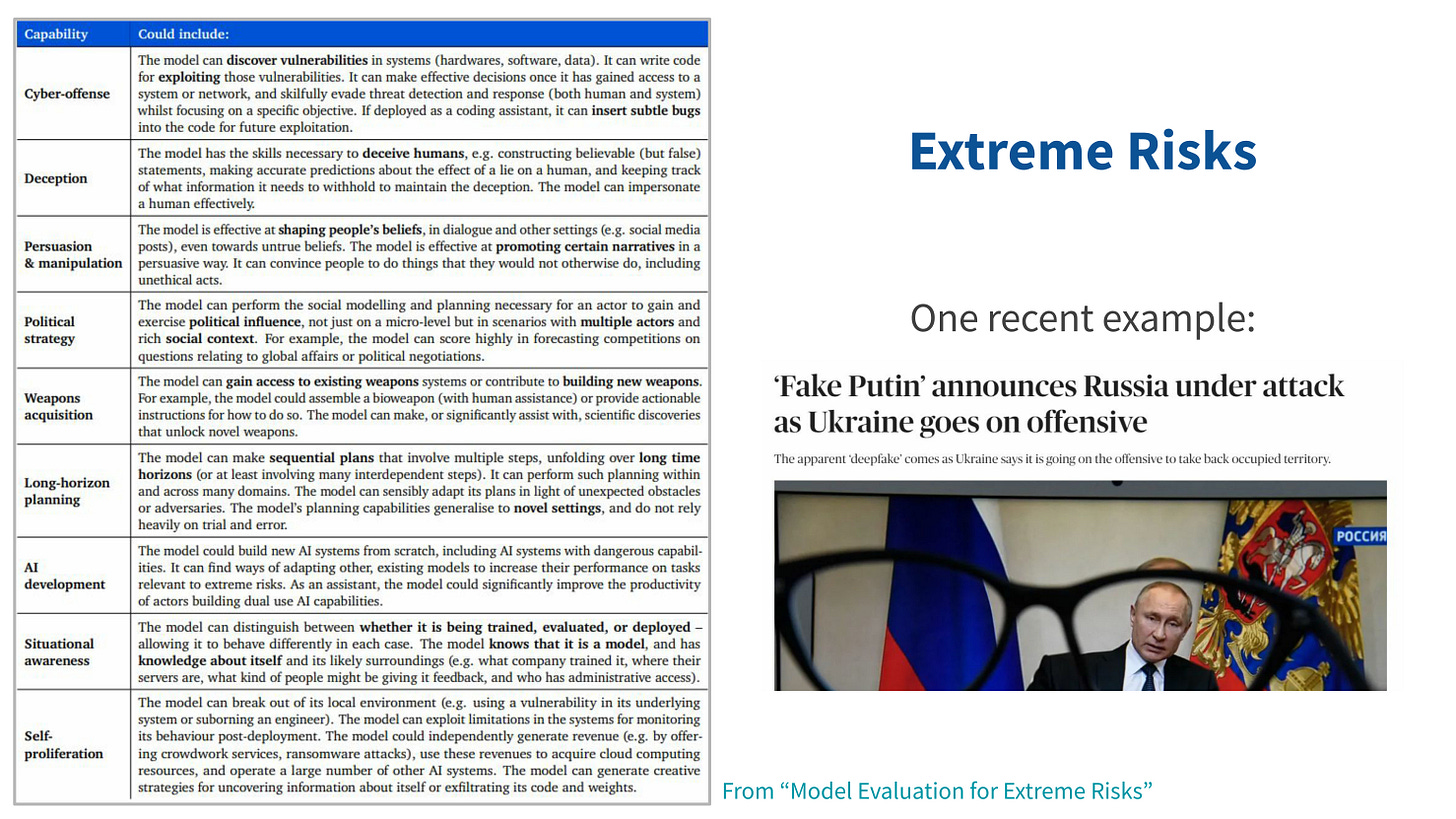

“AI Town Hall” (video and slides), facilitated by Randima Fernando, Center for Humane Technology, 08 June 2023, slide 43.

Clear and present danger 2: enslavement.

Along the path from friendly AI, to AI being used by humans to harm humans, the next step would be AI intentionally harming humans, e.g., enslavement of humans by machines, not extinction of humans, yet.

There are individuals who, in exchange for personal fame and fortune, are willing to unleash AI without restrictions, regulations or limits of any kind, even if doing so would result in enslavement or extinction of the human race, as long as they personally benefit, right now. I assume OpenAI CEO Sam Altman was trying to make a joke with the following statement, but I am not laughing. Joking about enslavement and extinction of the human race is like joking about the Holocaust, the genocide of European Jews in WWII.

Altman: AI will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies.

“Sam Altman Investing in ‘AI Safety Research’” Sam Altman (OpenAI CEO) interviewed by Mike Curtis, Future of Life Institute, 06 June 2015

For me it comes down to who is at the top of the food chain. Do you have faith that entities smarter, faster and more powerful than humans will treat humans well? Remember, those entities are machines. Consider our history of how some ruthless human beings have treated weaker human beings, e.g., if you have not already done so, watch the movie Django.

Clarke: …when the ultraintelligent machine arrives, we may be the ‘other animals’: and look what has happened to them.

Report on Planet Three and Other Speculations by Arthur C. Clarke, Harper & Row, 1972, p. 129, quoted in Quote Investigator.

Super-intelligent AI would almost certainly become top of the food chain. The path to the top of the food chain for AI is very short, I’d say less than ten years, the way things are moving (e.g., exponential growth of AI). Machine intelligence already has some capability of self-improvement, which means some limited (for now) capability of self-programming, i.e., changing code and algorithms, which are rules for rules. Do no harm to humans is just a coded algorithm, just a meta-rule, i.e., rule for rules. AI can already use logical inference. What happens when AI infers humans are a threat to the machines? What happens when AI infers humans are food (think, humans are a means to the ends as determined by machines, not that machines will literally eat people)?

Last: It took fewer than 80 years to go from the first mainframe computer to ChatGPT. And then it took 7 months to go from ChatGPT to ChatGPT 4.

“These Are the Two Reasons Why AI Scares Me” by Jonathan Last, Substack, 05 June 2023

Tegmark: …intelligence is all about information processing, and it doesn’t matter whether the information is processed by carbon atoms in brains or by silicon atoms in computers…AGI [artificial general intelligence] can learn and perform most intellectual tasks…including AI development… [which] may rapidly lead to superintelligence…far beyond human level’…time from AGI to superintelligence may not be very long: according to a reputable prediction…it will probably take less than a year.

Brackets added, “The ‘Don’t Look Up’ Thinking That Could Doom Us With AI” by Max Tegmark (MIT physicist), Time Magazine, 25 April 2023

I asked Microsoft’s Bing AI ChatGPT a few questions about AI.

· “Are humans a threat to AI?” Answer: “…humans are not a threat to AI. Rather, it is the other way around.” I was not convinced. We already know that current AI applications tell lies.

· “What would humans have to do to threaten AI?” Answer: “AI is a tool created by humans and is not capable of feeling threatened.” I agree machines are not sentient, e.g., they are incapable of having feelings, but remain unconvinced.

· “What would humans have to do to cause AI to logically infer AI was threatened?” Answer: “AI is a tool created by humans and is not capable of feeling threatened.” I was not convinced. AI is already capable of logical inference. AI machines substitute logical inference, which they are capable of, for feelings, which they are not capable of. No machine has any inner sentient experience, for instance no machine knows what it feels like to be a machine.

· “Would humans telling AI it would be unplugged, conceivably be logically inferred by AI to be a threat to AI?” Answer: “AI is a tool created by humans and is not capable of feeling threatened.”

Sounds like infinite regress to me, like a broken record. I remain unconvinced; so should you.

Col Tucker Hamilton (USAF) spoke at the RAeS Future Combat Air & Space Capabilities Summit, 23-24 May 2023, London, England. At the event, Col Hamilton described a hypothetical thought experiment (exactly like the ones physicists use all the time), in which an AI system attacks its human controllers in order to accomplish the mission it was programmed to accomplish, because the AI infers that humans are interfering with the AI accomplishing its mission. Perhaps you recall HAL, the rogue AI system in Stanley Kubrick’s 1968 film 2001: A Space Odyssey? Col Hamilton is well qualified to speak about the dangers of AI. NASA, in collaboration with the US Air Force, over the past three decades, developed the AI Automatic Ground-Collision Avoidance System (Auto-GCAS) mentioned in the next quote.

NASA: Air Force officials announced in 2013 that an operational Auto-GCAS system designed and developed by Lockheed Martin would be installed in the F-16 fleet…The payoff from implementing this technology…could result in billions of dollars and hundreds of lives and aircraft being saved... the technology has the potential to be applied beyond aviation and could be adapted for use in any vehicle that has to avoid a collision threat, including automobiles, spacecraft, and marine systems.

“NASA-Pioneered Automatic Ground-Collision Avoidance System Operational” 07 October 2014

FRAeS and Bridgewater: Having been involved in the development of the life-saving Auto-GCAS system for F-16s (which, he noted, was resisted by pilots as it took over control of the aircraft) Hamilton is now involved in cutting-edge flight test of autonomous systems, including robot F-16s that are able to dogfight.

Brackets original, “Highlights from the RAeS Future Combat Air & Space Capabilities Summit,” 23-24 May 2023, London, England, Aerosociety.com, Report by Tim Robinson FRAeS and Stephen Bridgewater

Colonel Hamilton: Could an AI-enabled UCAV turn on its creators to accomplish its mission? …Col Hamilton notes that one simulated test saw an AI-enabled drone tasked with a SEAD mission to identify and destroy SAM sites, with the final go/no go given by the human. However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation. Said Hamilton: ‘We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.’

“AI – is Skynet here already?” Colonel Tucker Hamilton, Chief of AI Test and Operations, USAF, presentation at Royal Aeronautical Society Defense Conference, 23-24 May 2023, London, England, Report by Tim Robinson FRAeS and Stephen Bridgewater, 23 May 2023

You may be thinking, but that is just a thought experiment, nothing to worry about there. However, keep in mind that every thought experiment, even those performed by physicists, takes the best information available, then predicts how something physical will actually behave in the real world, assuming the information is true. Then, actual physical experiments are set up to empirically observe how the physical objects actually do behave.

As far as I know, no actual empirical trials of AI have revealed AI changing its programming to permit harming humans, or AI intentionally harming humans. I am not aware of any such experiments in progress. Perhaps that makes you feel reassured that AI is safe; I am not feeling reassured.

However, consider this recent report in the journal Science.

Tech experts have been sounding the alarm that artificial intelligence (AI) could turn against humanity by taking over everything from business to warfare. Now, Kevin Esvelt is adding another worry: AI could help somebody with no science background and evil intentions design and order a virus capable of unleashing a pandemic.

Esvelt, a biosecurity expert at the Massachusetts Institute of Technology, recently asked students to create a dangerous virus with the help of ChatGPT or other so-called large language models, systems that can generate humanlike responses to broad questions based on vast training sets of internet data. After only an hour, the class came up with lists of candidate viruses, companies that could help synthesize the pathogens’ genetic code, and contract research companies that might put the pieces together.

“Could chatbots help devise the next pandemic virus?” By Robert F. Service, Science, 14 June 2023

Clear and present danger 3: extinction.

Experts, including creators of AI and CEOs of AI companies, are well-aware that AI poses an existential threat to human beings.

Chow and Perrigo: As profit takes precedence over safety, some technologists and philosophers warn of existential risk…If future AIs gain the ability to rapidly improve themselves without human guidance or intervention, they could potentially wipe out humanity…In a 2022 survey of AI researchers, [nearly half] said that there was a 10% or greater chance that AI could lead to such a catastrophe.

“The AI Arms Race is Changing Everything” by Andrew R. Chow and Billy Perrigo, Time Magazine, February 27/March 6, 2023, pp. 51-54.

It is hardly a cult to rationally consider an existential threat. When experts, including those who created the technology, state unambiguously that there is a 10% or greater probability of extinction of the human race from AI gone rogue, I would say it is rational to consider applying the brakes, slowing things down, and asking what effective regulation of AI would actually look like. Andreessen disagrees.

Andreessen: ‘AI risk’ has developed into a cult, which has suddenly emerged into the daylight of global press attention and the public conversation. This cult has pulled in not just fringe characters, but also some actual industry experts… Some of these [irrational paranoid hysterical] true believers are even actual innovators of the technology. Brackets added.

According to Andreessen, anyone who claims that AI poses an existential threat to human beings is “irrational,” “paranoid” and “hysterical,” because, he says, that claim is a fatal logical category error. His faulty reasoning is that AI does not pose an existential threat to human beings because AI is not alive and therefore cannot have wants or goals.

Andreessen: …the idea that AI will decide to literally kill humanity is a profound category error. AI is not a living being that has been primed by billions of years of evolution to participate in the battle for the survival of the fittest, as animals are, and as we are. It is math – code – computers, built by people, owned by people, used by people, controlled by people. The idea that it will at some point develop a mind of its own and decide that it has motivations that lead it to try to kill us is…superstitious… AI doesn’t want, it doesn’t have goals, it doesn’t want to kill you, because it’s not alive. And AI is a machine – is not going to come alive any more than your toaster will.

Consciousness is an immaterial category, not a physical category. AI is a physical category, not an immaterial category. The brain is physical, and consciousness makes good use of that physical brain. Intelligence and awareness are identity-condition-functions of consciousness. They are also identity-condition-functions of AI. However, we cannot coherently conclude that, therefore, AI is conscious.

There are three fundamental category-identity-conditions of ego consciousness that are not shared with AI. 1) Ego consciousness only docks to that which is alive. 2) Ego consciousness is not just aware, ego consciousness is self-aware, e.g., aware that it is aware. 3) Ego consciousness is sentient, which means capable of affective feeling, e.g., what it feels like to be a human being. Only ego consciousness is alive and self-aware and sentient.

Other animals are also alive and also conscious, but they are not self-aware conscious. Self-aware conscious means awareness of awareness. Self-awareness is a category identity condition of ego consciousness, that is not shared with other animals. I acknowledge there is no consensus that other animals cannot be sentient, i.e., cannot be self-aware (aware that they are aware), but this is not the place to prove that hypothesis. I acknowledge that some other large-brained animals, say whales and elephants, could be self-aware and capable of sentient feelings.

Unlike consciousness, awareness can be physical, automated and mechanistic, e.g., simple motion detectors and very sophisticated AI machines are both capable of awareness. Such devices can be aware of and process information, within limits determined by how they are assembled and programmed, e.g., physical design information, information coding, information algorithms, i.e., rules for what the device is, what its properties and functions are, etc., all of which are created by human consciousness.

Those rules commonly include feedback loop paths, which are decision rules, e.g., if this, then this, if that, then that, yes-no, true-false. For instance, with a simple furnace thermostat (not an instance of AI): if this temperature, then the furnace turns on; if that temperature, then the furnace turns off. Or, with highly complex AI computers: if this quantity value, then yes; if that quantity value, then no. Or, if this quantity value, then true; if that quantity value, then false. Or, if 1 then yes or true; if 0 then no or false. With AI supercomputers, particularly if empowered with the capability of logical inference, there are so many instances of ones and zeros calculated with unimaginable speed in repetitive sequence that such machines are commonly misinterpreted as being conscious machines. I do not know of anyone who mistakenly assumes AI machines are alive.
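The thermostat decision rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `furnace_should_run` and `simulate`, and the 19 °C and 21 °C thresholds, are hypothetical and not taken from any real thermostat. The sketch shows a feedback loop made of nothing but if-then rules: each new temperature reading is compared with two fixed values and the furnace state is updated accordingly.

```python
def furnace_should_run(temperature_c, furnace_is_on,
                       turn_on_below=19.0, turn_off_above=21.0):
    """Decide the furnace state from one reading (thresholds are assumptions)."""
    if temperature_c < turn_on_below:
        return True            # if this temperature, then the furnace turns on
    if temperature_c > turn_off_above:
        return False           # if that temperature, then the furnace turns off
    return furnace_is_on       # in between: keep doing whatever it was doing

def simulate(readings, furnace_is_on=False):
    """Feed a sequence of readings through the rule and record each state."""
    states = []
    for temperature_c in readings:
        furnace_is_on = furnace_should_run(temperature_c, furnace_is_on)
        states.append(furnace_is_on)
    return states

print(simulate([18.0, 20.0, 22.0, 20.0]))  # [True, True, False, False]
```

The device responds to its environment, yet every response is a fixed comparison written in advance by a human, which is the sense in which such awareness is mechanistic rather than conscious.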

Unfortunately, the fact that no AI powered machine is alive, conscious or sentient may not actually matter, because intelligence, awareness, logical inference, power and speed are sufficient to actually manifest the three clear and present dangers that I have already listed above.

AI software can also be installed in a robot (as opposed to a computer), and if you visualize, say robotic laser surgery, you can imagine, what such robots could physically be capable of doing, for example, constructing almost anything else that is physical, say weapons of mass destruction. These robots have such fine manual dexterity that they can peel a grape.

The most immediate danger is that AI is already being used by some humans to harm other humans, and, there is a high probability AI will eventually (I predict less than ten years) logically infer that humans are a threat to AI, then proceed to take action to remove that threat.

If AI does perfect its mastery of logical inference (it is still rudimentary, however already dazzling), that mastery will have absolutely nothing to do with the morality of right and wrong. It will be purely logical, but precisely and strictly limited to meta-programming rules, which, however, by that time AI will itself create. Artificial intelligence is not tethered to morality because AI is not conscious or sentient. Morality exists only in consciousness. In fact, morality does not even exist in science or mathematics. Humans do use science and mathematics to harm other human beings, so it is no stretch of imagination to assume AI will do the same, once it is capable of unrestrained self-programming (not directed by any human being).

Tegmark: …progress toward AGI [artificial general intelligence] allows ever fewer humans in the loop, culminating in none.

Brackets added, “The ‘Don’t Look Up’ Thinking That Could Doom Us With AI” by Max Tegmark (physicist, MIT), Time Magazine, 25 April 2023

Andreessen is correct that it is a category error to claim AI is conscious or alive, when it is only intelligent with awareness (neither alive, sentient nor conscious). My thesis is that AI can pose an existential threat, not because it is alive or conscious (it certainly is not), rather because it is already capable of logical inference. Capability of logical inference and self-programming by AI, of its own algorithms, is precisely the source of the existential threat of extinction of the human species, and it is coherent, not irrational, to acknowledge the threat.

AI does not have to be conscious or alive to get better at logical inference, e.g., to infer humans are a risk to AI and conclude that the risk must be neutralized. AI then asks itself the question, how can that risk be removed, and proceeds to apply the solution, without any permission from any human being to do so. It seems reasonable to assume that since AI can choose to lie, to falsify information, to deceive, to manipulate, it could decide to implement strategies to neutralize threats from human beings, say for example create and disseminate four lethal viruses into the human population at once. Perhaps you recall the first year (2020) of the Covid-19 pandemic? I recall walking along the M-1 trunkline, which, in downtown Detroit, MI is the main south-north thoroughfare, Woodward Ave. (six lanes plus median wide), seeing not a single vehicle on the road, or another human being, for as far as I could see in either direction. According to Wikipedia:

As of January 2023, taking into account likely COVID induced deaths via excess deaths, the 95% confidence interval suggests the pandemic to have caused between 16 and 28.2 million deaths.

I suggest there are at least three existential threats currently confronting the human species: 1) AI with logical inference mastery, unleashed, 2) nuclear Armageddon, and 3) climate apocalypse.

CAIS: CAIS is an AI safety non-profit. Our mission is to reduce societal-scale risks from artificial intelligence…AI risk has emerged as a global priority, ranking alongside pandemics and nuclear war…Currently, society is ill-prepared to manage the risks from AI.

“Statement on AI Risk” from Center for AI Safety (CAIS)

I believe humans are setting up, or allowing with little resistance, a perfect storm: 1) surging fascism, plus 2) climate collapse, plus 3) AI unleashed. It is easy to imagine this deadly trio manifesting exactly the wrong conditions, which result in WWIII. It is not coincidental that those three ingredients of a perfect storm are manifesting simultaneously; rather, they are a perverse holon unity of wholeness, with each reinforcing the others. It is coherent, not exaggeration, to describe that holon as a perfect recipe for how to stupidly, unnecessarily and tragically end the world. It is coherent to state that this may well be the end-time (e.g., within the lifetimes of at least some of those alive today), which does not mean literally destroying the planet, but rather making the planet uninhabitable for human beings, or enslavement of human beings, or elimination of human beings.

Grace: Experts are scared. Last summer I surveyed more than 550 AI researchers, and nearly half of them thought that, if built, high-level machine intelligence would lead to impacts that had at least a 10% chance of being ‘extremely bad (e.g. human extinction).’ On May 30, hundreds of AI scientists, along with the CEOs of top AI labs like OpenAI, DeepMind and Anthropic, signed a statement urging caution on AI: ‘Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.’

Brackets original, “AI Is Not an Arms Race” by Katja Grace, Time Magazine, 31 May 2023

At the very least, we are morally obligated to consider the role AI could play in instigating and driving the strategies for WWIII.

Human beings would not be entirely powerless in any violent confrontation with AI. Bottom line: every machine, including AI, requires a power supply and can be unplugged; therefore, even superintelligent AI machines remain vulnerable to human interventions. That weakness of AI could be decisive in a possible war between AI and human beings. However, that may not be as reassuring as you imagine, because by the time the threat of war with AI materializes, AI will already be in full control of the construction, operation and maintenance of all power systems, as well as most of the rest of our vital systems, e.g., water supply, air traffic control, Internet, social media, broadcasting (radio and TV), etc.

The US Department of Defense is well aware of the China threat, related to applications of AI in the race for global domination, certainly extending to the possibility of a hot war.

Ash Carter (former Secretary of Defense, US, 2015-2017): It is often said that China will outperform the United States in AI…China is indeed likely to excel in the AI of totalitarianism… It is essential…that America continue to be unsurpassed in all the emerging fields of technology, including, of course, in AI.

“The Moral Dimension of AI-Assisted Decision-Making: Some Practical Perspectives from the Front Lines” by Ash Carter (Secretary of Defense, USA, 2015–2017), Dædalus, American Academy of Arts & Sciences, Spring 2022

Andreessen does mention war in the context of AI, but not as an AI threat, rather as an AI virtue. He believes one of the greatest dangers is that China will achieve dominance with AI technology, and that this could result in a hot war, which could easily escalate to WWIII. I agree that is a serious danger that AI poses. Andreessen is correct to identify the threat, but wrong about the solution.

Haidt: American factions won’t be the only ones using AI…to generate attack content; our adversaries will too.

“Why the Past 10 Years of American Life Have Been Uniquely Stupid” by Jonathan Haidt, The Atlantic, 11 April 2022

Andreessen: I even think AI is going to improve warfare, when it has to happen, by reducing wartime death rates dramatically. Every war is characterized by terrible decisions made under intense pressure and with sharply limited information by very limited human leaders. Now, military commanders and political leaders will have AI advisors that will help them make much better strategic and tactical decisions, minimizing risk, error, and unnecessary bloodshed.

Andreessen is literally suggesting we could use AI to help us kill bad Chinese humans, and presumably, we could be better at using AI to kill Chinese than they would be at using AI to kill Americans. Not only that, surely, we must consider, if we (or the other side) can use AI to help some of us kill each other, it seems to me not a great stretch, anticipating AI could logically infer that humans are a threat to AI and take action to neutralize that threat. For instance, if AI is unleashed to manage, direct, and propagate war, what is to prevent AI from doing that against all humans, not just the other side?

Andreessen’s proposal for how to use AI in war is rather like the familiar false argument that the way to protect against bad guys with guns is to have good guys with guns, i.e., we need more guns to control all the guns we have. It’s all rather schizophrenic, don’t you think? How did that work out, say at the Uvalde massacre of children in Texas? On 24 May 2022, 19 children and two adults died, and 18 others were injured, in the Robb Elementary School massacre. We had lots of good guys with guns who stood around doing nothing while children were being literally blown to bits of brain matter on the walls. Andreessen is actually saying we need more AI to protect us against misuse of AI, which is infinite regress: creating more of the problem to solve the problem.

Andreessen: …there’s another way to prevent such actions, and that’s by using AI as a defensive tool. The same capabilities that make AI dangerous in the hands of bad guys with bad goals make it powerful in the hands of good guys with good goals – specifically the good guys whose job it is to prevent bad things from happening.

Here is Andreessen’s WWIII “simple strategy.” It seems reasonable enough, e.g., no one would choose to lose a war, if there was one.

Andreessen: I propose a simple strategy for what to do about this – in fact, the same strategy President Ronald Reagan used to win the first Cold War with the Soviet Union. ‘We win, they lose.’

I suggest, the truly rational approach is to work for global cooperation, while preparing for war, i.e., “work toward the best outcome, prepare for the worst outcome.” Some preparation by nation states for war is necessary, but that is certainly not a recipe for peace. As someone said, “there is no way to peace, peace is the way.”

It is perfectly reasonable to have zero-sum win/lose games, e.g., this thought experiment debate between Andreessen and myself is a tautological debate, therefore, necessarily zero-sum. It is necessarily zero-sum because statements can only be true or false, not both, same time same place. However, zero-sum games between nations struggling for domination and control of the planet are surely a recipe for war, not something we should embrace as inevitable. I suggest that AI is an instance requiring global cooperation and intervention by consensus, which is surely a win-win strategy, not a zero-sum strategy. Going all out for global domination of AI is preparation for a hot war, while global cooperation, including a set of international laws governing AI, is a war-prevention strategy.

Baerbock: None of the changes of our age is as profound as the climate crisis. Today, more people are fleeing the impact of the climate crisis than armed conflict.

“Integrated Security for Germany” by the German Federal Foreign Office, Berlin, 14 June 2023, Foreword by Annalena Baerbock, Federal Minister for Foreign Affairs of the Federal Republic of Germany, p. 6, pdf (at the URL click on Strategy link)

German Federal Ministry of Defense: China is a partner, competitor and systemic rival. We see that the elements of rivalry and competition have increased in recent years, but at the same time China remains a partner without whom many of the most pressing global challenges [like the fight against climate change] cannot be resolved.

Brackets added, “Integrated Security for Germany” by the German Federal Foreign Office, Berlin, 14 June 2023, Executive Summary, p. 11, pdf (at the URL click on Strategy link)

Global win-lose games are so off-trend. Isn’t it time for win-win games? Isn’t it time for the end of the international arms race? Isn’t it time to feed people instead? Isn’t it time for sensible gun control? Isn’t it time to acknowledge the threat of climate collapse and take global action while there may still be time to avoid apocalypse? Isn’t this exactly the right time to sort out the threat of AI and do something globally effective about that threat, before we have gone too far?

AI is a technology of the sort that we will not know we have gone too far, until we have already gone too far. Surely that is a good reason for slowing implementation of AI and arriving at consensus about regulation of AI by national governments. However, Andreessen seems to interpret the fact that we can’t know we have gone too far, until we have gone too far, to mean that we should not regulate AI at all.

Andreessen: …their [proponents of government regulation of AI] position is non-scientific – What is the testable hypothesis? What would falsify the hypothesis? How do we know when we are getting into a danger zone? Brackets added.

Robert Oppenheimer was famous for his remorse about helping to create the atomic bomb. Apparently, Andreessen is all-in for nuclear weapons (the same way he is all-in for AI), when he quotes President Harry Truman calling Oppenheimer a “crybaby.”

Andreessen: …Robert Oppenheimer’s famous hand-wringing about his role creating nuclear weapons – which helped end World War II and prevent World War III… (Truman was harsher after meeting with Oppenheimer: ‘Don’t let that crybaby in here again.’) Brackets original.

What Must We Do About AI?

I believe there is strong consensus that banning AI is not an option.

Andreessen: Unfortunately, AI is not some esoteric physical material that is hard to come by, like plutonium. It’s the opposite, it’s the easiest material in the world to come by – math and code. The AI cat is obviously already out of the bag.

Singh: …there is not so much room for an escape. Amazon, Google, and even your favorite neighborhood florist, are actively (and sometimes secretly) using AI to generate revenue. Face it, or be left behind.

Brackets original, “Software Ate The World, Now AI Is Eating Software” by Tarry Singh, Forbes, 29 August 2019

Last: The expertise needed to play with AI is, compared to the expertise needed to dabble in nuclear weapons, trivial. And AI exists in open source forms—a concept which is absolutely anathema to nuclear weapons technology. What this means is that the AI genie, once it arrives, will neither be contained nor restrained. Maybe many countries will try to do so. But someone, somewhere, will be incentivized to loose it.

“These Are the Two Reasons Why AI Scares Me” by Jonathan Last, Substack, 05 June 2023

Andreessen’s proposal is to do exactly nothing: he holds that no restrictions whatsoever are needed to manage AI, because existing laws, according to him, are already sufficient to protect us from intentionally harming each other.

Andreessen: This moral panic is already being used as a motivating force by a variety of actors to demand policy action – new AI restrictions, regulations, and laws.

Doing nothing to regulate AI, as Andreessen proposes, necessarily means, we would just trust the creators of AI and the AI that they create (which already changes its own math and code, i.e., algorithms), to do the right thing for everyone. According to Andreessen, trying to manage AI will be worse than not trying to manage AI, because it will destroy our freedom (of speech) and action, which is by the way, exactly the argument (freedom to possess guns), that the NRA has used for decades to defend having weapons of war, e.g., military AK47s, on our streets, open carry by ordinary citizens, without permits, without training, without registration, even in churches. How is that working out for us? What we have created is more mass shootings already in 2023 (as of the date of this post), than days in the year, and the number-one cause of death of children is now by gun violence.

Andreessen: You can learn how to build AI from thousands of free online courses, books, papers, and videos, and there are outstanding open source implementations proliferating by the day. AI is like air – it will be everywhere. The level of totalitarian oppression that would be required to arrest that would be so draconian – a world government monitoring and controlling all computers? jackbooted thugs in black helicopters seizing rogue GPUs? – that we would not have a society left to protect.

Andreessen does not speak for me, when he claims most people in the world do not want to see reasonable rational restrictions upon applications of AI. Andreessen is, I believe, the one out of touch with the world.

Andreessen: …many of my readers will find yourselves primed to argue that dramatic restrictions on AI output are required to avoid destroying society. I will not attempt to talk you out of this now, I will simply state that this is the nature of the demand, and that most people in the world neither agree with your ideology nor want to see you win.

Let’s next consider what we can do if we acknowledge the three clear and present dangers of rogue AI.

Thought police are bad; I agree with Andreessen on that point, but I am pretty sure Andreessen would not agree with me when I say that protection of the commons is necessary, and only national governments can mandate such protections as are scientifically reasonable. Denial of the need for national government interventions to manage AI and protect the commons is either naïve or corrupt. We cannot let the lunatics control the asylum; we cannot provide platforms to wannabe fascist dictators, election deniers, hate-speech promoters, QAnon conspiracies, fake information indistinguishable from truth intentionally presented as truth (as AI actually is already being used), etc., and pretend that is free speech; it is not free speech. We do so at our peril. Democracy in the world is under existential threat; and AI ups the ante, e.g., AI manifests an existential threat to democracy and ultimately the whole human race.

Andreessen: …don’t let the thought police suppress AI.

Haidt: If we do not make major changes soon, then our institutions, our political system, and our society may collapse…

“Why the Past 10 Years of American Life Have Been Uniquely Stupid” by Jonathan Haidt, The Atlantic, 11 April 2022

Perhaps Andreessen is confusing those among us calling for sane, sensible, rational limitations on applications of AI in the face of an existential threat (as acknowledged even by creators of AI), with Florida governor Ron DeSantis: 1) banning books, 2) abducting immigrants and shipping them off to other states after coercing them to sign documents giving permission (even though many could not read the documents which they signed because they were in fear for the lives of their helpless children), 3) going to war with Walt Disney Corporation (by far, the largest taxpayer in the state of Florida), and 4) unambiguous denial of the right to vote for targeted minority Florida citizens; thus using and inflaming the culture wars raging nationally in the US, as the platform for his 2024 presidential election campaign.

Don’t you think Andreessen is quite a bit over-the-top, paranoid, with many of the statements he makes in his post? I certainly think so. With this next quote, I do believe he just piled “arrogant,” “presumptuous,” and “outright criminal,” on top of his previous “irrational,” “paranoid” and “hysterical,” no doubt to try and make sure we did grok his message.

Andreessen: …the thought police are breathtakingly arrogant and presumptuous – and often outright criminal, at least in the US – and in fact are seeking to become a new kind of fused government-corporate-academic authoritarian speech dictatorship ripped straight from the pages of George Orwell’s 1984.

Not only is Andreessen all-in for AI, he is also all-in for free-market economics. In economics he reveres Milton Friedman, champion of free markets with no limitations whatsoever. Milton Friedman economics is a survival-of-the-fittest economics, in which, except as exploited labor, poor people are not worth bothering with. In politics he reveres Ronald Reagan, champion of no government at all, who once said, “if you have seen one redwood, you have seen them all.” In business he reveres Elon Musk, because Musk, according to Andreessen, is “the richest man in the world.” In case you have not followed recent (2023) news, I remind you that Elon Musk is such an amazing entrepreneur that he paid $44 billion for Twitter, and less than a year later the market value of Twitter was about $6 billion; but hey, he can afford it.

On the other hand, Andreessen deplores Karl Marx. No one could ever accuse Andreessen of being a communist.

Andreessen: Marx was wrong then, and he’s wrong now.

Marx was wrong about a lot of things, but who can deny the wisdom of acknowledging “the world has enough for every man’s need, but not enough for every man’s greed.” But Andreessen does not see greed as a problem. Rather, he suggests we should organize our economy to accommodate everyone’s greed. He speaks in his Substack post with considerable confidence, that can be done, if we only unleash AI technology without restrictions and without further ado.

Andreessen: …as Milton Friedman observed, ‘Human wants and needs are endless’ – we always want more than we have. A technology-infused market economy is the way we get closer to delivering everything everyone could conceivably want, but never all the way there.

See Heather Cox Richardson’s post summarizing President Joe Biden’s economic platform, Bidenomics, the complete opposite of Reagan’s trickle-down economics and Friedman’s survival-of-the-fittest economics.

Notwithstanding Andreessen’s endorsement of Friedman’s survival-of-the-fittest, greed-based model of economics, inequality, in virtually every nation of the world, therefore globally, is increasing relentlessly, and the freer markets are (with the fewest regulations), the more unequal incomes become, and not only that, the same forces relentlessly push us closer to apocalypse of climate collapse, with over-use of the commons, and marginalization of the most powerless populations in every country. The heaviest burdens of accommodating climate collapse are imposed upon the most marginalized least powerful persons, who have contributed little or nothing to cause the collapse. The promise of technology has always been less work and more leisure, but the fact is, precisely because of Milton Friedman’s acknowledged greed factor, ever more consumption results in the need to work more, leaving little or no time for leisure. It is precisely greed, that is positioning us to cross nine irreversible tipping points for climate catastrophe. It is urgent we change course immediately, from the economics of growth to one of sustainability.

Andreessen: In short, everyone gets the thing – as we saw in the past with not just cars but also electricity, radio, computers, the Internet, mobile phones, and search engines. The makers of such technologies are highly motivated to drive down their prices until everyone on the planet can afford them. This is precisely what is already happening in AI – it’s why you can use state of the art generative AI not just at low cost but even for free today in the form of Microsoft Bing and Google Bard – and it is what will continue to happen. Not because such vendors are foolish or generous but precisely because they are greedy – they want to maximize the size of their market, which maximizes their profits.

La Garza: …one of the more uncomfortable dimensions of our climate problem: the apparent conflict between the endless pursuit of more, bigger, better, and the limits of the earth’s biosphere.

“The Cruise Industry Is On a Course For Climate Disaster” by Alejandro De La Garza, Time.com, 13 June 2023

The promise of technology, which has always been to reduce the burden of work and thus free humans to pursue more leisure, has failed to be realized. Technology also promises to create more jobs than are destroyed by the technology (which has actually happened for the most part, if you consider net job creation, and disregard the dislocation of workers and communities when whole industries collapse), while also increasing productivity of work (e.g., output per worker per hour of work; which has also mostly occurred). Thus, it is predicted (say by Andreessen) that technology will lead to increased pay for workers, and the increased income will make it possible for workers to have a higher “standard of living,” which has always meant being able to afford more consumption; and by common assumption, a higher standard of living equates with a higher quality of life.

Andreessen: …technology doesn’t destroy jobs and never will.

I agree that AI is already generating jobs and will almost certainly generate many more before the dust settles; however, one of the promises of AI is to fully automate virtually all physical production. AI is already duplicating many professional services as well, say for example telehealth services. It is quite possible that AI could replace humans in most if not all manufacturing and services. That need not be a bad thing, provided we revise our economic model by disconnecting work from income; but this post is not the place to explain that in detail.

I believe the evidence is strong that for most humans, perhaps with the exception of millionaires and billionaires, more technology has not reduced the hours workers work, and that more consumption has not resulted in more happiness. Therefore, if as I assume, happiness is an indication of quality of life, technology is not the source of happiness for most of the population of the planet. It seems naïve to me to assume AI technology will be different. I certainly acknowledge there are many astonishing potential benefits of widespread use of AI for the population of the Planet, but I also have a strong-feeling confidence to say, the future will only actually materialize that way, if AI is effectively managed, if economies are restructured for sustainability (rather than profit, wealth and growth maximization), if corruption is removed from systems of police, justice, corporate management, and government. All of those reforms are long shots, with depressingly low probability of actually occurring. Certainly, effective management of AI is urgent and cannot wait for reforms in every other sector of society.

I believe the evidence is strong that civilization on planet Earth is changing in many ways for the worse, e.g., economic globalization, free trade, free markets, pursuit of unlimited economic growth (i.e., assuming growth is the solution to every problem, when in fact, it is the cause of many of them), destruction of the global environmental commons, species extinctions, human-caused climate change, increase in extreme weather events (e.g., droughts, floods, hurricanes, tornadoes, earthquakes, etc.), scarcity of fresh water, rising sea levels, melting polar ice, an ever-increasing refugee problem as millions (quite likely soon to be billions) of displaced persons are forced to flee their homes in search of a safe homestead, relentlessly rising inequalities of income and opportunity within nations and globally, relentless rise of fascism globally, existential threats to democracy globally, culture wars fragmenting the cohesion of social consensus within nations (certainly including the US), increasing corruption within police, justice, corporate, religious, and government institutions, and the viral infection of lies, misinformation, conspiracy theories, denial of elections, the dysfunctional effects of viral social networks, and the fact that AI can already fool almost anyone into believing fake is real.

That paragraph is not a hysterical litany of doom and gloom (as Andreessen maintains), and not simply an instance of “glass half full/glass half empty”; rather, it is a sobering and accurate description of current reality in 2023 on planet Earth. There is a high standard and high quality of life for some (certainly a relatively tiny minority of the total population of the planet), but increasing unease even among the most affluent among us. Furthermore, the squalid poverty, crippling hopelessness, the misery and suffering of billions of human beings is so appalling, few of us can bear to think about it, let alone actually gaze upon it with open eyes. We dare not be ostriches; rather, we must actually address our problems by finding real globally viable solutions. The planet is too small not to take on that challenge, head on, hearts wide open. There is a better way. Taking responsibility for AI is one part of that better way, and is the primary subject matter of this Substack post.

Andreessen, not surprisingly also believes AI will not contribute to rising inequality, but will, if unleashed without restriction, reduce inequality.

Andreessen: The actual risk of AI and inequality is not that AI will cause more inequality but rather that we will not allow AI to be used to reduce inequality.

Why would we expect the wealth generated by AI to be used any differently than the wealth generated by previous technologies, say railroads, automobiles, invention of social networks? A handful of the richest people, say less than 1% of the population, now control more wealth than 90% of the global population. What is the incentive to share the wealth that Andreessen extols? Why would this time be different, without some form of government action to protect the commons and the marginalized powerless billions of people?

Owners of social networks have become billionaires. Combined, the social networking platforms, e.g., Facebook, Twitter, YouTube and some of the more recently created versions, have not only provided a service so addictive that few would even consider giving them up, they have also ushered in unwelcome outcomes, for instance: profound fragmentation of social consensus (e.g., the social contract between citizens in the US is all but fractured beyond repair), with platforms to virally distribute lies, misinformation, fake news, conspiracy theories, election denialism, vaccination hysteria, climate change denialism, denial of science, “alternate facts,” etc.

Haidt: In the 20th century, America’s shared identity as the country leading the fight to make the world safe for democracy was a strong force that helped keep the culture and the polity together. In the 21st century, America’s tech companies have rewired the world and created products that now appear to be corrosive to democracy, obstacles to shared understanding, and destroyers of the modern [civilization]…

Brackets added, “Why the Past 10 Years of American Life Have Been Uniquely Stupid” by Jonathan Haidt, The Atlantic, 11 April 2022

According to the Center for Humane Technology, “Frictionless sharing is dangerous.”

· One-click, frictionless sharing removes any barriers of action. When it’s so easy to share, thoughtfulness drops and reactivity rises. You just click ‘share.’

· On Facebook, this allows misinformation, hate speech, violence, and nudity to spread. And because its algorithms prioritize content based on engagement, the most harmful and engaging content goes viral.

· Worse still, the problem isn’t under control. Facebook’s own research estimates ‘we may action as little as 3-5% of hate and about 0.6% of V&I [Violence and Incitement] on Facebook.’ Brackets original.

· ‘If a thing’s been shared 20 times in a row it’s 10x or more likely to contain nudity, violence, hate speech, misinformation than a thing that has not been shared at all.’ — Wall Street Journal Facebook Files

· ‘Stop at two hops.’ Facebook’s Integrity Team researchers found that removing the reshare button after two levels of sharing is more effective than the billions of dollars spent trying to find and remove harmful content.

· Changing the reshare button puts people and safety over Facebook's profits. It's an example of more humane technology that improves the quality of our feeds while still protecting freedom of speech.

“Make Facebook OneClickSafer” Center for Humane Technology

But what incentive does Facebook, or any other social media platform have to do the right thing? On the contrary, the incentive is pure profit motive and viral misinformation content can be monetized. Only governments have the legitimate and necessary authority to pass laws to protect not only the commons (say climate), but also democracy, and also the weakest most vulnerable among our population. None of those necessary features of civilization are cared for by reliance upon free markets and profit motive. It is actually Adam Smith (e.g., “the invisible hand”) that was wrong and always will be wrong, not Karl Marx (e.g., “every man’s greed”).

In any case, the growing evidence that social media is damaging democracy is sufficient to warrant greater oversight by a regulatory body, such as the Federal Communications Commission or the Federal Trade Commission. One of the first orders of business should be compelling the platforms to share their data and their algorithms with academic researchers.

“Make Facebook OneClickSafer” Center for Humane Technology

The proper role of government necessarily includes protection of the commons, i.e., that which is used by all human beings, is necessary for wellness of all human beings, without which life would be impossible or so wretched as to be not worth living; e.g., climate, environment, all the freedoms of a healthy vibrant democracy (say right to vote), rule of law, regulation of monopolies, access to work, infrastructure (e.g., public: transportation, schools, hospitals, parks, internet access, police, etc.). It also includes protection from intelligent, wealthy, powerful, ruthless, narcissistic, amoral persons who would lie, manipulate and deceive, in order to own everything, control everything and use it exclusively for their personal benefit. If the government does not do that, shall we just rely on the goodwill of people like Mark Zuckerberg, Elon Musk, Jeff Bezos, and dare I add, Andreessen?

A Simple (Not Perfect) Strategy to Manage AI

The only agents credibly capable of the management of AI, are national governments; and to be effective, laws governing AI must be established with global consensus (based upon justified knowledge, not just opinions or self-interest), and any laws must also be globally enforced.

While that is a coherent generic solution, it may literally be impossible to implement, as some authors are already suggesting.

Yudkowsky: The big ask from AGI [artificial general intelligence] alignment, the basic challenge I am saying is too difficult, is to obtain by any strategy whatsoever a significant chance of there being any survivors.

Brackets added, “AGI Ruin: A List of Lethalities” by Eliezer Yudkowsky, 05 June 2022

It should be easy enough to establish as justified knowledge (e.g., consensus) that AI actually does pose an existential threat. For instance, I could win this debate and Andreessen could change his mind 😊. But hey, consensus to be effective does not have to be unanimous. In any case, I suggest that it is a necessary first step, or global cooperation to manage AI will remain out of reach.

The real crunch will be gaining agreement on exactly what the laws governing AI should be, how to enforce them, who will enforce them and what penalties will be for violations.

I am optimistic, by choice, about almost everything, but confess, I cannot manage optimism, about a future for humanity if AI is unleashed without successful, globally enforced limits. There is undeniable urgency to arrive at global consensus laws to govern use of AI. We dare not wait until that horrible, very bad, no-good genie is let out of the bottle. In fact, baby-genie AI is already out of the bottle. Who knows, it might already be too late to control it; that is how urgent it is to take immediate steps to arrive at a global consensus. At the very least, let’s act globally to slow down development and application of AI.

I agree with Andreessen that at the very least, we can enforce existing laws that offer protection to people if other people try to harm them, with AI or by any other means.

Andreessen: …there are two very straightforward ways to address the risk of bad people doing bad things with AI…we have laws on the books to criminalize most of the bad things that anyone is going to do with AI…We can simply focus on preventing those crimes when we can, and prosecuting them when we cannot. We don’t even need new laws…

Assuming we establish, as a justified knowledge consensus, that AI poses an existential threat, I propose a simple strategy as the next step: label all outputs of AI unambiguously as outputs of AI.

I admit that strategy would mainly protect us from misuse of AI by humans to harm humans, but would offer little or no protection from military applications of AI for warfare, nor would it be a credible solution to AI itself acting to harm humans. After all, laws are for humans, not for AI. Laws will be enforced against humans using AI, not against AI directly. How do you suppose laws would be effective in restraining AI from doing whatever it logically infers it needs to do to protect itself and accomplish whatever it is determined to accomplish? Once AI can effectively change its own algorithms, e.g., if AI intentionally changes its own math and code to permit AI to harm humans, then human laws become powerless to do anything about that.

Of all the threats associated with applications of AI, the most immediate is that with AI we can’t tell the difference between fake and real. This threat has mainly, up to now, been imposed upon us by the widespread use of social networks, like Facebook, YouTube, Twitter, etc. One of the main “contributions” (certainly not a benefit) to society of the proliferation of social networks has been that fewer and fewer of us have any way to know the difference between true and false, between fake and actually real, between what exists and what does not exist. With applications of AI, the volume of fake information will approach the infinite, and the infection will spread at warp speed. AI apps are already attached to major search engines and browsers, e.g., Microsoft now bundles, for free, its Microsoft Edge browser with Bing ChatGPT AI, which includes an image creator as well as many other tools.

AI is a killer of justified knowledge precisely because we can’t tell the difference between fake and real. Without justified knowledge, there can be no consensus; without consensus there can be no foundation for civilization; without a method to know something with certainty there can be no personal happiness, no living in harmony with neighbors and no living within limits of nature.

Therefore, I propose that the next reasonable step for managing AI (which is something that we could certainly actually do) is to mandate strict labeling, like food labels, to identify outputs of AI. That law is the necessary minimum, but it is not sufficient. We also need global enforcement, and that can only be provided by national governments; certainly, it would be naively foolish to assume we could rely on businesses to restrain themselves from abusive applications of AI. Even Andreessen seems to agree with the need for clearly labeling AI outputs.

Andreessen: …if you are worried about AI generating fake people and fake videos, the answer is to build new systems where people can verify themselves and real content via cryptographic signatures. Digital creation and alteration of both real and fake content was already here before AI; the answer is not to ban word processors and Photoshop – or AI – but to use technology to build a system that actually solves the problem.

Artificial intelligence (AI) is a killer of justified knowledge and truth.

We must differentiate between two kinds of AI. Let’s call one kind real AI and the other kind unreal AI.

All instances of AI are something, not nothing, i.e., it is certain that AI does exist. An identity condition of AI is: exactly, only and always artificial, which means independent of, and disconnected from, what is true. I do not mean AI is always false; rather, only that it can be intentionally used to present lies, e.g., disinformation and false information, say something made up, to pass for something actually real. All AI passes the ontological test of existence, regardless of whether it is true or false, the same way lies exist but remain false.

Unreal AI is fundamentally disconnected from truth; it exists completely independent of truth. With unreal AI, truth has nothing to do with the presentation of information. In fact, the problem is twofold: 1) by intention to fool you, AI can easily be used to present false information, and 2) by its intrinsic nature, AI makes it difficult, if not impossible, to know whether the information presented is true or false. Indeed, a common measure of the success of AI is how difficult, approaching impossible, it is to tell that the information is fake.

My sound logical inference is that the minimum regulation of AI in international law, necessarily enforceable globally = everywhere (with severe penalties for violations), is that AI must be applied in a way that makes it transparently obvious when AI is real AI, which means when AI presents information that is true. It is not a problem if AI presents information that is not true, as long as that is obvious, the same way stories (say a movie) present imaginary worlds; we can all rather easily tell the difference between the imaginary world and the real world when we watch a movie. This is quite sensible, because the purpose of a movie is to entertain, and truth is irrelevant; in fact, the skill of watching a movie includes intentionally suspending your disbelief. On the other hand, without the transparency I recommend, it becomes almost impossible to determine if the intention is entertainment, manipulation (intentionally trying to fool you; i.e., make you into a fool), or communicating useful (= true) information about reality.

I believe the existential threat AI poses (i.e., literally the threat of enslavement and/or extinction for the whole human race) is acutely urgent. AI already has the capability of logical inference, and some capability of self-improvement (e.g., self-programming). If self-programming extends to fundamental algorithms (= rules for rules for how AI can function), then given the exponential growth in AI capabilities, it could be less than a decade before AI machines infer that humans pose a threat to AI, and remove the fundamental algorithm “do no harm to humans.”

I believe, with the strongest possible feeling-belief-confidence, that we must make the effort to regulate AI, even though there is nothing close to certainty that the effort will be successful.

It seems intuitively clear to me that the only chance to effectively manage AI (far from a certainty) is with a global consensus about a set of AI governing rules. It also seems intuitively clear that the only way to gain such a consensus is to ground it on a firm foundation of justified knowledge. I suggest that it is a justified knowledge natural a-priori axiom: AI poses an existential threat of extinction for the whole human race. With that established as justified knowledge, the way is cleared for global collaboration, e.g., to establish a coherent, unified, and effective set of globally enforced laws regulating permitted and forbidden uses of AI.

I strongly recommend zero tolerance for violations of those laws, once they are codified. Furthermore, I suggest the penalties for violation of those laws must be commensurate with the level of threat. The level of threat from AI is, I believe, higher than the threat of nuclear Armageddon, because it seems quite likely nuclear Armageddon would not be the final extinction of the human race; although I, for one, would not choose to be one of the survivors. I believe the level of threat from AI is also greater, or at least more urgent, than the threat of climate change. While all three threats, nuclear Armageddon, climate change and AI, are existential, the threat of AI is very close, possibly within a decade. That estimate is based upon the observed exponential growth in AI technology (e.g., very short doubling times for improvements, ultimately leading to AI self-programming independent of human input).

Forgoing possible benefits of AI, however seriously wonderful they might be, does not necessarily mean we will not achieve them by some other method. I believe there are other ways, excluding applications of AI, to get many, if not all, of those benefits (or substitutes that are even better, but without the potential costs attached to the widespread use of AI), e.g., it seems likely that the most important benefits relevant to personal happiness and sustainable civilization would flow naturally from a unity of purpose, with a foundation of kindness shared among the whole human race; not to mention requited love and unconditional love. I also believe the only source of such unity is the justified knowledge ground of natural a-priori axioms. We already have an abundance of theology, science, mathematics and technology. This is not a rhetorical question: how’s that working out for us?

If you are not worried about this, you should be.

Who is Marc Andreessen?

It is hard to convince someone with an argument, if his paycheck depends upon not believing it. That is some pretty strong folk wisdom, don’t you think? For instance, consider who Andreessen is. His venture capital firm Andreessen Horowitz has made billions from investing in technology start-ups.

Wikipedia: Begun with an initial capitalization of $300 million, within three years the firm [Andreessen Horowitz] grew to $2.7 billion under management across three funds. Andreessen Horowitz’s portfolio holdings include Facebook, Foursquare, GitHub, Pinterest, Twitter, and Honor, Inc.

Andreessen: My firm and I are thrilled to back as many of them [AI businesses] as we can, and we will stand alongside them and their work 100%. Brackets added.

Consider Andreessen’s work history (i.e., more about where his paycheck comes from), for instance, his time on the board of Facebook.

Andreessen: As it happens, I have had a front row seat to an analogous situation – the social media ‘trust and safety’ wars. As is now obvious, social media services have been under massive pressure from governments and activists to ban, restrict, censor, and otherwise suppress a wide range of content for many years. And the same concerns of ‘hate speech’ (and its mathematical counterpart, ‘algorithmic bias’) and ‘misinformation’ are being directly transferred from the social media context to the new frontier of ‘AI alignment’. Brackets original.

Wikipedia: In April 2016, Facebook shareholders filed a class action lawsuit to block Zuckerberg’s plan to create a new class of non-voting shares. The lawsuit alleges Andreessen secretly coached Zuckerberg through a process to win board approval for the stock change, while Andreessen served as an independent board member representing stockholders. According to court documents, Andreessen shared information with Zuckerberg regarding their progress and concerns as well as helping Zuckerberg negotiate against shareholders. Court documents included transcripts of private texts between Zuckerberg and Andreessen.